UK Think Tank Calls for AI Incident Reporting System

The Centre for Long-Term Resilience (CLTR) has recommended establishing a comprehensive incident reporting system to address critical gaps in AI regulation. The recommendation comes as more than 10,000 AI safety incidents have been recorded, underscoring the need for robust regulatory frameworks to ensure the safe deployment of AI technologies.

The CLTR's call for action underscores the urgency of addressing the safety risks associated with AI development and deployment. With an incident reporting system in place, stakeholders can better understand the failure modes and unintended consequences of AI systems and take a proactive approach to risk management.


Source: Artificial Intelligence News

Recent Posts

Quantum Quill Media System Upgrade...

Generative AI in Film Production

CES 2024 Highlights to Groundbreaki...

iOS 18: A Glimpse into Apple's Futu...

Apple’s Latest AI Innovations: Enha...

Advancements in Multimodal AI

Unlocking Your Potential: How AI Ca...

Astra Bionics: Pioneering the Futur...

Medical Advances and Public Health

Apple Announces Latest Update: New...

Scheduled Maintenance Upgrade for Q...

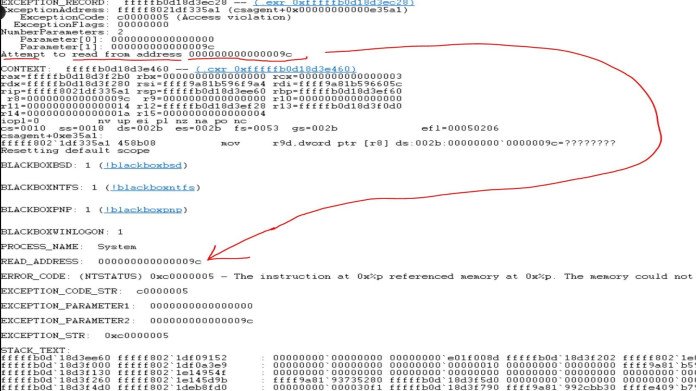

Analysis: The Cause Behind Crowdstr...

OpenAI Bans Misused AI Bot

From Sci-Fi to Reality: How AI and...

Revolutionizing Medical Application...

Yango Tech unveils AI shelf monitor...

Broadcom Challenges Nvidia in AI Ch...

AI-Powered Storytelling: Transformi...

Experience Life Uninterrupted:

Tech News: The Latest in AI and Rob...

Atlanta suburb becomes first to tes...

🚀 Historic Milestone: Elon Musk’s...

Quantum Quill Media Announces Strat...